Image Feature Extraction - Color Moment Indexing

This blog post covers the current state of an image processing project I've been working on, with a focus on content-based image retrieval.

Project Goals:

As with most of the projects I post here, the goal is as much about building tools that perform a desired function as it is about hands-on learning. So for this project I will be employing both conventional methods, such as color moment indexing, and more sophisticated methods that use neural network architectures such as VGG16.

The main goal of this project, in terms of functionality, is to build a toolkit that processes, analyzes and visualizes images in ways that allow me to label and cluster them based on their content and to understand trends in image datasets from a temporal perspective. For example, how has the content in the images changed over time? I would like to use these tools to analyze the many photos I take for photography projects, as well as to analyze satellite imagery, since that is an area of geospatial research I've been exploring more lately.

As of now, the tools I've made for this project consist of the following parts:

Project setup and data tracking

Image pre-processing

Image similarity analysis and clustering

Data visualization

We can begin by going over the project setup and data tracking tools. These are simple scripts written to automate project setup and help track data. Since images will be copied, resized and shuttled to different locations, it's important to have a well-structured system for keeping track of images and any data associated with them.

setup.py

The setup.py script, as the name suggests, is used to set up the workspace by creating any folders and subfolders needed.

Subfolders:

manifest

The manifest folder stores files that contain information about the processed images. These manifests are used to quickly recall image metadata without needing to reprocess an image, as well as the results of any statistical analysis performed on an image, which are used for indexing, similarity measurements and clustering.

images

The images folder will contain all the reformatted images we want to process. As we will see later, images are copied from their original location, resized and saved to this folder. This is done to avoid corrupting or damaging the original image should something go wrong during processing, and to reduce the data processing load by working with smaller images.

images_grouped

After images have been processed, clustered and labeled, they are shuttled to folders based on the label assigned to them by the k-means clustering algorithm.
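As a rough sketch of this grouping step, assuming the cluster labels are already available as a mapping from image file name to integer label: the function name group_images and the cluster_<n> folder names are hypothetical, and the sketch copies rather than moves files so the images folder stays complete.

```python
import os
import shutil


def group_images(labels, image_dir, grouped_dir):
    """Copy each image into a subfolder named after its cluster label.

    `labels` maps image file names to integer labels (e.g. from k-means).
    The "cluster_<n>" naming is a hypothetical convention.
    """
    for filename, label in labels.items():
        target = os.path.join(grouped_dir, f"cluster_{label}")
        os.makedirs(target, exist_ok=True)  # create the label folder on first use
        # copy2 into a directory keeps the file name and its timestamps
        shutil.copy2(os.path.join(image_dir, filename), target)
```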

paths.json

The paths.json file contains the paths to each of the newly created folders and is used as a reference file when declaring paths in other scripts.
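A minimal sketch of what setup.py might do, assuming the three folders above sit directly under a project root and that paths.json simply maps each folder name to its path. The function name setup_workspace is hypothetical; the post does not show the script's code.

```python
import json
import os


def setup_workspace(root):
    """Create the project subfolders and record their paths in paths.json.

    Folder names follow the post; the layout of paths.json is an assumption.
    """
    paths = {}
    for name in ("manifest", "images", "images_grouped"):
        folder = os.path.join(root, name)
        os.makedirs(folder, exist_ok=True)  # no error if the folder already exists
        paths[name] = folder
    with open(os.path.join(root, "paths.json"), "w") as f:
        json.dump(paths, f, indent=2)
    return paths
```

Other scripts can then load paths.json with json.load and look folders up by name instead of hard-coding paths.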

shuttleImages.py

With the shuttleImages.py script, you can simply provide the directories of whatever images you want to process by adding the directory paths to the "dirs_" list.

The script cycles through the list, navigates to each directory and reads over each file in it.

The script checks whether each file is an image. If it is, metadata such as the file's original name, size and date modified is written to the "imageStats_ref.json" file.

The image will then be resized according to the maximum dimensions provided by the user and shuttled to the "images" folder.
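The resizing step needs target dimensions that fit within the user's maximum width and height without distorting the image. A minimal sketch of that calculation, assuming the aspect ratio should be preserved and small images should not be enlarged (fit_within is a hypothetical helper, not a function from the post):

```python
def fit_within(width, height, max_width, max_height):
    """Return (new_width, new_height) scaled to fit inside the maximum
    dimensions while preserving the aspect ratio. Images that already
    fit keep their original size (they are never upscaled)."""
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, fit_within(4000, 3000, 800, 800) returns (800, 600). The resulting size could be passed to an imaging library's resize call; Pillow's Image.thumbnail performs an equivalent fit-within-a-box resize in place.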

For the sake of consistency, the image is renamed so that all image names are of the same format and can easily be parsed later for other purposes.

The "imageStats_ref.json" file contains both the new and original name of each image, so we can use this file whenever we need information about the original image. Additionally, this reference file is used later to recall the date/time the original image was taken.
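A sketch of how the image check, metadata collection and renaming could fit together. It assumes an extension-based image check and an "img_00001.jpg"-style naming scheme; both are hypothetical, as the post does not specify its naming format. Note that a file's modification time is only a proxy for when a photo was taken; the EXIF DateTimeOriginal tag, where present, records the capture time more reliably.

```python
import json
import os
from datetime import datetime

# Hypothetical, deliberately simple "is this an image?" check by extension
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".tif", ".tiff"}


def build_reference(dirs_, prefix="img"):
    """Collect metadata for every image in dirs_ and assign uniform new names.

    Returns a dict keyed by the new file name. Key names and the
    "<prefix>_00001.jpg" scheme are illustrative assumptions.
    """
    ref = {}
    index = 0
    for directory in dirs_:
        for filename in sorted(os.listdir(directory)):
            path = os.path.join(directory, filename)
            ext = os.path.splitext(filename)[1].lower()
            if not os.path.isfile(path) or ext not in IMAGE_EXTS:
                continue  # skip subfolders and non-image files
            index += 1
            stat = os.stat(path)
            new_name = f"{prefix}_{index:05d}{ext}"
            ref[new_name] = {
                "original_name": filename,
                "original_path": path,
                "size_bytes": stat.st_size,
                "date_modified": datetime.fromtimestamp(stat.st_mtime).isoformat(),
            }
    return ref


def write_reference(ref, out_path="imageStats_ref.json"):
    """Save the reference records as JSON."""
    with open(out_path, "w") as f:
        json.dump(ref, f, indent=2)
```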

imageStats.py

The imageStats.py script reads over each image in the given directory and calculates the image's color moments. Color moments are a scale- and rotation-invariant way of describing the color distribution in an image. For an RGB image, color moments are calculated for each of the three color channels. Because color moments are scale and rotation invariant, the size and orientation of an image do not matter when calculating the similarity between images; instead, the distributions of colors in the images are compared.

The color moments calculated by the imageStats.py script are as follows:

Zeroth order - Mean

The average color value in the image, calculated by summing all the values in a given color channel, then dividing that sum by the total numbe of pixels in the image.

First-order - Variance

The variance measures the spread of colors in the image and provides an idea of how much the distribution of colors deviate from the mean.

Second-order - Skewness

Skewness measures the asymmetry of the distribution of values in a given color channel.

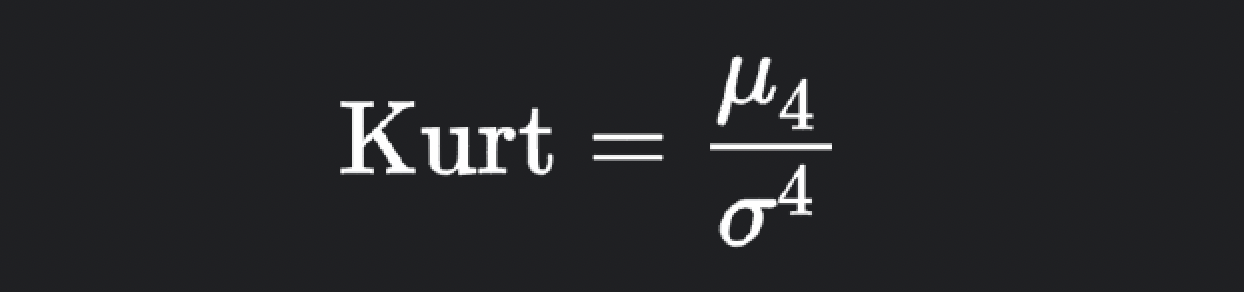

Third-order - Kurtosis:

Kurtosis measures length of the "tail" of the distribution. Kurtosis provides a more detailed understanding about the shape of the distribution such as whether the distribution is more peaked or more flat.
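For reference, the standard population definitions of these four moments for a color channel c with pixel values p_{c,1} through p_{c,N} (N being the number of pixels) are shown below, with μ the mean, σ² the variance, γ the skewness and κ the kurtosis. The post does not state which conventions imageStats.py uses (for example, sample versus population normalization, or excess kurtosis, which subtracts 3 so that a normal distribution scores 0), so treat these as the textbook forms rather than the script's exact formulas.

$$
\mu_c = \frac{1}{N}\sum_{i=1}^{N} p_{c,i},
\qquad
\sigma_c^2 = \frac{1}{N}\sum_{i=1}^{N}\left(p_{c,i}-\mu_c\right)^2
$$

$$
\gamma_c = \frac{1}{N}\sum_{i=1}^{N}\left(\frac{p_{c,i}-\mu_c}{\sigma_c}\right)^3,
\qquad
\kappa_c = \frac{1}{N}\sum_{i=1}^{N}\left(\frac{p_{c,i}-\mu_c}{\sigma_c}\right)^4
$$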

Color moments are calculated using the calc_color_moments function, which takes an image as its input and returns a tuple of color moments for each color channel.
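A pure-Python sketch of the idea behind calc_color_moments, assuming the image has already been decoded into a list of (R, G, B) tuples (decoding with an imaging library is left out). It uses population (1/N) definitions; returning 0.0 skewness and kurtosis for a flat channel is an assumption, since both are undefined when the standard deviation is zero. This is an illustration, not the author's implementation.

```python
import math


def channel_moments(values):
    """Return (mean, variance, skewness, kurtosis) for one channel's values,
    using population (1/N) definitions."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    std = math.sqrt(variance)
    if std == 0:
        # flat channel: skewness and kurtosis are undefined, report 0.0
        return mean, variance, 0.0, 0.0
    skewness = sum(((v - mean) / std) ** 3 for v in values) / n
    kurtosis = sum(((v - mean) / std) ** 4 for v in values) / n
    return mean, variance, skewness, kurtosis


def calc_color_moments(pixels):
    """Sketch of calc_color_moments for a list of (R, G, B) tuples.

    Returns one moment tuple per channel, in R, G, B order.
    """
    channels = list(zip(*pixels))  # [(r1, r2, ...), (g1, ...), (b1, ...)]
    return tuple(channel_moments(ch) for ch in channels)
```

For real photos, NumPy arrays would be far faster than Python lists; scipy.stats.skew and scipy.stats.kurtosis compute the same statistics, with kurtosis returning excess kurtosis unless fisher=False is passed.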

The code

The code of the imageStats.py script can be broken up into five main parts.

Part one - Define Functions

First we have the calc_color_moments function, which, as mentioned before, is responsible for calculating the color moments of an input image.

Next we have the calc_midpoint function, which is used to generate a single point that represents the image in 3D space. Since we are calculating color moments for each of the three color channels (red, green and blue), we will have three sets of color moments. Three color moment values from each channel are treated as X, Y, Z coordinates, producing one point in 3D space per channel. To generate a single point that represents all the color channels, we calculate the midpoint (centroid) of these three points. The function takes the coordinates of the three points as its input and returns the new point's X, Y, Z coordinates as a tuple.
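A minimal sketch of calc_midpoint, assuming "midpoint" here means the centroid of the three per-channel points, i.e. the average of their coordinates (the post does not show the formula):

```python
def calc_midpoint(p1, p2, p3):
    """Return the centroid of three (x, y, z) points as a tuple:
    each coordinate is the average of the three channels' coordinates."""
    return tuple((a + b + c) / 3 for a, b, c in zip(p1, p2, p3))
```

For example, calc_midpoint((0, 0, 0), (3, 0, 0), (0, 3, 6)) returns (1.0, 1.0, 2.0).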

Finally, the normalize_val function is used to normalize an input value to between zero and one. Since color moment values can have significantly different ranges, we want to normalize the data before treating the color moments as coordinates; that way, we can plot them all in the same 3D space.
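Normalizing a value between zero and one given a minimum and maximum is min-max scaling. A sketch of normalize_val along those lines, assuming the minimum and maximum come from the whole dataset; the guard for a zero range is an addition not mentioned in the post:

```python
def normalize_val(value, min_val, max_val):
    """Min-max normalize value into [0, 1].

    Returns 0.0 when every image has the same value (max == min) to
    avoid dividing by zero; that fallback is an assumption.
    """
    if max_val == min_val:
        return 0.0
    return (value - min_val) / (max_val - min_val)
```

For example, normalize_val(5, 0, 10) returns 0.5.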

Part two - Calculate Color Moments

In this block of code, the script reads each image in the given image directory. Because the images in this directory have been resized and saved as new files, their created and modified dates differ from those of the original images. Since I want to keep the dates of the original image associated with its reduced-size copy, we can reference the "imageStats_ref.json" file to grab the original dates. Next, the image is passed to the calc_color_moments function and the results are parsed. A new item with a unique image id is added to the imageStats_ dictionary, and the color moment calculations are added to their respective channels. Notice that the new dictionary item also contains color moment values with the "_norm" designation. These values are set to None by default and will be replaced later with the normalized value of each color moment. In order to normalize the data, we need the data ranges, so we append the color moments to the respective lists in the "poolData_" dictionary.
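One possible shape for the imageStats_ and poolData_ structures, sketched under assumptions: the R/G/B channel keys, the moment names, the "dates" key and the add_image helper are all hypothetical, since the post does not show the dictionary layout.

```python
from collections import defaultdict

CHANNELS = ("R", "G", "B")
MOMENTS = ("mean", "variance", "skewness", "kurtosis")

imageStats_ = {}
poolData_ = defaultdict(list)  # e.g. poolData_["R_mean"] -> values across all images


def add_image(image_id, moments, original_dates):
    """Record one image's moments (one 4-tuple per channel, R, G, B order).

    `original_dates` is whatever was looked up in imageStats_ref.json.
    Every "<moment>_norm" slot starts as None and is filled in later.
    """
    entry = {"dates": original_dates}
    for channel, values in zip(CHANNELS, moments):
        stats = {}
        for name, value in zip(MOMENTS, values):
            stats[name] = value
            stats[name + "_norm"] = None
            # pool every value so min/max can be found across the dataset
            poolData_[f"{channel}_{name}"].append(value)
        entry[channel] = stats
    imageStats_[image_id] = entry
```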

Part three - Calculate Data Ranges

As mentioned previously, we need to normalize the color moment data before using it as coordinates for plotting points in 3D space. To normalize the data between zero and one, we need the data range of each color moment, found by calculating the minimum and maximum values seen across all the processed images. To do this, we can simply use the built-in min and max functions on the lists contained within the poolData_ dictionary.
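With poolData_ holding one plain list per color moment, the ranges reduce to one min/max pair per list. A sketch, assuming keys such as "R_mean" (a hypothetical naming scheme):

```python
def calc_ranges(poolData_):
    """Return {key: (min, max)} for every pooled list of moment values."""
    return {key: (min(values), max(values)) for key, values in poolData_.items()}
```

For example, calc_ranges({"R_mean": [0.2, 0.9, 0.5]}) returns {"R_mean": (0.2, 0.9)}.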

Part four - Normalize Data

Now that we've calculated the color moments and their respective data ranges, we can normalize the data. To do this, we iterate over the imageStats_ dictionary and pass each color moment value into the normalize_val function along with the appropriate minimum and maximum values. The function returns a float between zero and one, which then replaces the default None value of the corresponding normalized color moment attribute.
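A sketch of this normalization pass, assuming each image entry stores raw values per channel alongside matching "<moment>_norm" slots and that the ranges are keyed "<channel>_<moment>" (both hypothetical layouts). normalize_val is repeated here as simple min-max scaling so the block runs on its own.

```python
def normalize_val(value, min_val, max_val):
    # min-max scaling into [0, 1]; 0.0 if all values are equal
    if max_val == min_val:
        return 0.0
    return (value - min_val) / (max_val - min_val)


def normalize_stats(imageStats_, ranges):
    """Fill every "<moment>_norm" slot in place.

    Assumes imageStats_[image_id][channel][moment] holds the raw value and
    ranges["<channel>_<moment>"] holds its (min, max) across all images.
    """
    for entry in imageStats_.values():
        for channel in ("R", "G", "B"):
            stats = entry[channel]
            raw_names = [k for k in stats if not k.endswith("_norm")]
            for name in raw_names:
                lo, hi = ranges[f"{channel}_{name}"]
                stats[name + "_norm"] = normalize_val(stats[name], lo, hi)
```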

Part five - Write Data

Finally, we can write the results to a JSON file.

Test Run

For this example I'll be using a collection of travel photos I've taken over recent years. The collection contains a total of 1055 photos taken in various places, including Paris, Spain, Norway, California and Texas. I'll run the Python scripts on these images and visualize the results with Rhino and Grasshopper. If the scripts work as intended, I should be able to generate a visualization where similar images cluster next to one another in 3D space.

This is the Grasshopper definition used to generate the visualization. The definition is split into four parts.

01: Read the image stats JSON with the Grasshopper Python node and parse the results into the outputs (a,b,c,d,e,f,g)

02: Get the unique id of each image and display the image id at the centroid of where the image will be visualized in 3D space.

03: Construct 3D points calculated from the color moments of each image (see the imageStats.py script). At each point, generate a plane onto which the image will be mapped. The plane should be oriented vertically, parallel to the XZ plane.

04: Render the images in 3D by mapping each image onto the planes generated in part 03.
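Steps 01 and 03 amount to turning each image's normalized moments into one 3D point. A plain-Python sketch of that logic, assuming the JSON stores per-channel dictionaries with "_norm" values and that mean, variance and skewness are the three moments used as X, Y, Z (a hypothetical choice; the post does not say which moments map to which axis). Inside the Grasshopper Python component, each tuple could then be turned into a Rhino point; that conversion is omitted here.

```python
import json

# Hypothetical choice of which normalized moments become X, Y, Z
AXES = ("mean_norm", "variance_norm", "skewness_norm")


def image_points(json_text, scale=100.0):
    """Return (ids, points): one 3D point per image, the centroid of the
    three per-channel points, scaled from the 0-1 range to model units."""
    stats = json.loads(json_text)
    ids, points = [], []
    for image_id, entry in stats.items():
        # one (x, y, z) point per color channel
        channel_pts = [[entry[ch][axis] for axis in AXES] for ch in ("R", "G", "B")]
        centroid = [sum(coords) / 3 for coords in zip(*channel_pts)]
        ids.append(image_id)
        points.append(tuple(c * scale for c in centroid))
    return ids, points
```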

3D Image Cloud

Here is what the images look like once rendered in 3D space. It appears the model is working, as images with similar color distributions are plotted near one another in the cloud.

Let’s select some images from different places in the cloud and compare them.

These images are part of the same cluster in the image cloud. I've selected four images: two taken at the Pompidou Center in Paris and two of a full moon taken in Marfa, TX. There are obvious similarities between the images taken at the same location or of the same subject matter, but we can also identify similarities across all four images even though their subject matter differs. The color schemes of the images are similar, with oranges, reds, blacks and blues making up the dominant colors. The distribution of colors is also similar, most notably because of its circular nature: the circular, tube-like walkway in the Pompidou Center has characteristics similar to the round shape of the full moon. So, mathematically, these images have relatively similar color moments, which is why they appear in the same cluster of the image cloud.
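To check a visual impression like this numerically, one could rank images by the Euclidean distance between their points in normalized color-moment space. A sketch with a hypothetical nearest_neighbors helper; it is not part of the scripts described above.

```python
import math


def nearest_neighbors(points, query_id, k=3):
    """Return the k image ids closest to query_id by Euclidean distance.

    `points` maps image ids to (x, y, z) tuples, e.g. the normalized
    color-moment coordinates used to place images in the cloud.
    """
    origin = points[query_id]
    others = [(math.dist(origin, p), image_id)
              for image_id, p in points.items() if image_id != query_id]
    return [image_id for _, image_id in sorted(others)[:k]]
```

With points a=(0, 0, 0), b=(1, 0, 0), c=(0, 2, 0) and d=(5, 5, 5), nearest_neighbors(points, "a", k=2) returns ["b", "c"].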